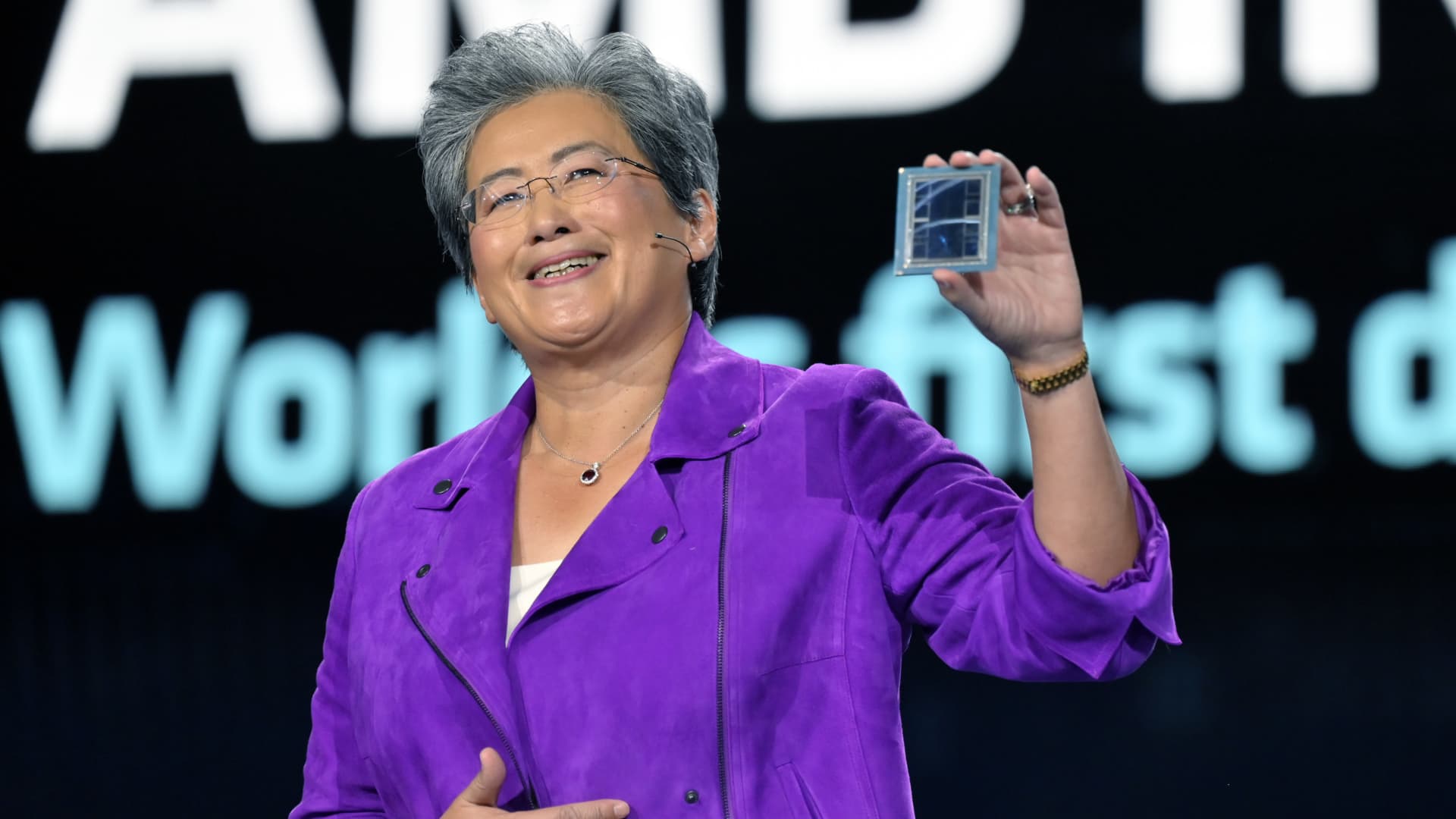

Lisa Su displays an AMD Instinct MI300 chip during her keynote address at CES 2023 at The Venetian Las Vegas on Jan. 4, 2023, in Las Vegas, Nevada.

David Becker | Getty Images

AMD said on Tuesday that its most advanced GPU for artificial intelligence, the MI300X, will start shipping to some customers later this year.

AMD’s announcement represents the strongest challenge yet to Nvidia, which, according to analysts, currently dominates the AI chip market with more than 80% market share.

GPUs are the chips that companies like OpenAI use to build cutting-edge artificial intelligence programs like ChatGPT.

If developers and server makers adopt AMD’s AI chips, which it calls “accelerators,” as an alternative to Nvidia’s products, that could open a huge untapped market for the chipmaker, which is best known for traditional computer processors.

AMD CEO Lisa Su told investors and analysts in San Francisco on Tuesday that artificial intelligence is the company’s “largest, most strategic long-term growth opportunity.”

“We think the data center AI accelerator (market) will grow from around $30 billion this year to over $150 billion in 2027 at a compound annual growth rate of more than 50 percent,” Su said.

AMD didn’t disclose pricing, but the move could put price pressure on Nvidia’s GPUs, such as the H100, which can cost $30,000 or more. Lower GPU prices could help reduce the high cost of serving AI applications.

AI chips have been one of the bright spots in the semiconductor industry, while sales of PCs, the traditional driver of semiconductor processor sales, have declined.

Last month, Su said on an earnings call that the MI300X would be available for sampling this fall, with volume shipments beginning next year. She shared more details about the chip in a presentation on Tuesday.

“I like this chip,” Su said.

MI300X

AMD says its new MI300X chip and its CDNA architecture are designed for large language models and other cutting-edge AI models.

“At the heart of all this is the GPU. GPUs are enabling generative AI,” Su said.

The MI300X can use up to 192GB of memory, which means it can fit larger AI models than other chips. Nvidia’s rival H100, for example, supports only 120GB of memory.

Large language models used in generative AI applications consume a lot of memory because they run an ever-growing number of calculations. AMD demonstrated the MI300X running a 40-billion-parameter model called Falcon. By comparison, OpenAI’s GPT-3 model has 175 billion parameters.

“Model sizes are getting larger, and you actually need multiple GPUs to run the latest large language models,” Su said, noting that with the increased memory on AMD chips, developers won’t need as many GPUs.

AMD also said it will offer an Infinity Architecture system that combines eight MI300X accelerators in a single machine. Nvidia and Google have developed similar systems that combine eight or more GPUs in a single box for AI applications.

One reason AI developers have historically favored Nvidia chips is that Nvidia has a mature software package, called CUDA, that gives developers access to the chips’ core hardware features.

AMD said Tuesday that it has its own AI chip software, called ROCm.

“While it’s been a journey, we’ve made really great progress building a powerful software stack that works with the open ecosystem of models, libraries, frameworks and tools,” said AMD President Victor Peng.