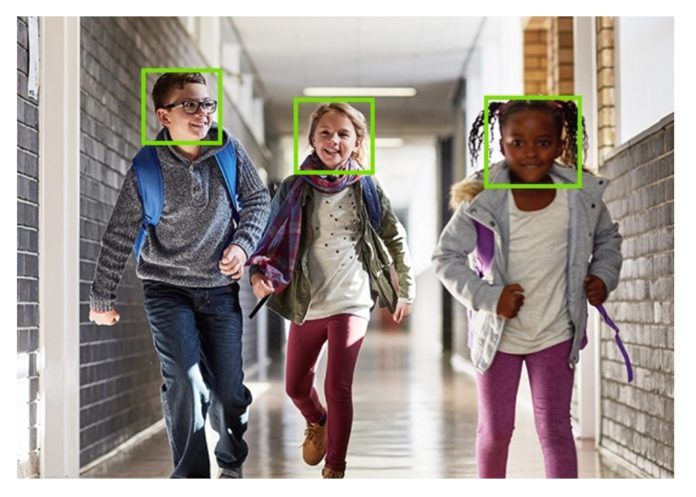

In the age of AI, a new school year brings new challenges, as students increasingly turn to ChatGPT and other AI tools to complete assignments, write essays and emails and perform other tasks previously limited to human agency. Meanwhile, the large language models (LLMs) and other algorithmic engines powering these products rely on massive amounts of training data in huge biometric datasets, leading to questions about how facial recognition and other biometric systems impact children’s privacy. A notable case is unfolding in Brazil, where human rights campaigners want the government to ban companies from scraping the web for biometric data.

AI brings some good, many bad outcomes for kids: UNICEF

In a recent explainer article, UNICEF asks how to empower and protect children in a world currently smitten with AI and its promises. It notes research showing that ChatGPT has become the fastest-growing digital service of all time, racking up 100 million users in just two months. (By comparison, it took TikTok nine months to reach the same figure.) Kids are using it more than adults, with 58 percent of children aged 12-18 reporting having used the tool, versus 30 percent of those over 18.

“In the face of increasing pressure for the urgent regulation of AI, and schools around the world banning chatbots, governments are asking how to navigate this dynamic landscape in policy and practice,” the piece says. “Given the pace of AI development and adoption, there is a pressing need for research, analysis and foresight to begin to understand the impacts of generative AI on children.”

UNICEF takes a balanced view, noting the ways in which AI systems might benefit kids if used responsibly and ethically for certain applications. These include using generative AI for insights into medical data to support advances in healthcare, and using algorithms to optimize learning systems for kids across the educational spectrum. Many creative workers and artists, however, will take umbrage at the suggestion that AI could be beneficial in offering “tools to support children’s play and creativity in new ways, like generating stories, artwork, music or software (with no or low coding skills).”

AI risks include danger to democracy, child exploitation, possible electrocution

While it leads with the benefits, UNICEF also notes “clear risks that the technology could be used by bad actors, or inadvertently cause harm or society-wide disruptions at the cost of children’s well-being and future prospects.” This section is a much longer catalog of potential harms.

“Persuasive disinformation and harmful and illegal content” could warp the democratic process, increase “online influencer operations,” lead to more deepfake scams, child sexual abuse content, sextortion and blackmail, and generally erode trust until nothing is considered reliable and the philosophical concept of truth vanishes. Interacting with chatbots could rewire kids’ ability to distinguish between living things and inanimate objects. “AI systems already power much of the digital experience, largely in service of business or government interests,” says the piece. “Microtargeting used to influence user behavior can limit and/or heavily influence a child’s worldview, online experience and level of knowledge.” The future of work will be thrown into question. Inequality will increase.

“Amazon Alexa,” says UNICEF, “advised a child to stick a coin in an electrical socket.”

In Brazil, digital watchdog Human Rights Watch has called for an end to the scraping and use of children’s photos for the training of AI algorithms. In an update to its March 2024 submission to the UN Committee on the Rights of the Child, the group says data scraped from the web for the purposes of training AI systems without user consent is “a gross violation of human rights” that can lead to exploitation and harassment. It is calling on Brazil’s government to “bolster the data protection law by adopting additional, comprehensive safeguards for children’s data privacy” and to “adopt and enforce laws to protect children’s rights online, including their data privacy.”

Synthetic data increasingly an area of interest for training algorithms

If we are to keep kids safe from the potential risks, there is still the question of how to train AI systems that are currently in use with data that reflects the existence of children.

A new paper, entitled “Child face recognition at scale: synthetic data generation and performance benchmark,” addresses “the need for a large-scale database of children’s faces by using generative adversarial networks (GANs) and face-age progression (FAP) models to synthesize a realistic dataset.” In other words, the authors propose creating a database of synthetic faces of children by sampling adult subjects and using InterFaceGAN to de-age them – a novel pipeline for “a controlled unbiased generation of child face images.”
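For readers curious what “de-aging” in a GAN’s latent space can look like, here is a minimal toy sketch of the general idea behind latent-direction editing as popularized by InterFaceGAN: a face’s latent code is shifted along a direction associated with an attribute such as age. This is not the authors’ pipeline. The vector size, the random `age_direction`, and the helper names `shift_latent` and `age_score` are all assumptions made for illustration, and no real generator or face image is involved.

```python
import math
import random


def unit(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def shift_latent(z, direction, alpha):
    """Move latent code z by alpha along a unit-length attribute direction."""
    n = unit(direction)
    return [zi + alpha * ni for zi, ni in zip(z, n)]


def age_score(z, direction):
    """Signed distance of z from a hypothetical 'age' boundary through the origin."""
    n = unit(direction)
    return sum(zi * ni for zi, ni in zip(z, n))


rng = random.Random(0)
dim = 8  # toy size (assumption); real GAN latents are far larger

# Stand-in for a learned age direction. In practice this would be estimated
# from labelled samples, not drawn at random as it is here.
age_direction = [rng.gauss(0, 1) for _ in range(dim)]

# Stand-in for the latent code of one sampled adult subject.
adult_latent = [rng.gauss(0, 1) for _ in range(dim)]

# Negative steps move the code toward the "younger" side of the boundary,
# giving several synthetic ages for the same subject.
steps = (1.0, 2.0, 3.0)
younger_versions = [shift_latent(adult_latent, age_direction, -a) for a in steps]
scores = [age_score(z, age_direction) for z in younger_versions]
```

In this toy setup each step lowers the age score by exactly the step size. In a real system, each shifted code would be passed through a trained generator to render the same subject at a younger apparent age.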

Their resulting “HDA-SynChildFaces” database “consists of 1,652 subjects and 188,328 images, each subject being present at various ages and with many different intra-subject variations.” The data shows that, when evaluated and compared against the results of adults and children at different ages, “children consistently perform worse than adults on all tested systems and that the degradation in performance is proportional to age.” Furthermore, the study “uncovers some biases in the recognition systems, with Asian and black subjects and females performing worse than white and Latino-Hispanic subjects and males.”

Fake face databases could use more funding as interest rises

Synthetic data has piqued the interest of researchers across the AI, biometrics and digital identity spectrum. A paper from Da/sec Biometrics and Security Research Group at Germany’s Hochschule Darmstadt similarly looks at how to achieve child face recognition at scale using synthetic face biometrics. And new research from CB Insights says “we are running out of high-quality data to train LLMs. That scarcity is driving up demand for synthetic data – artificially generated datasets such as text and images – to supplement model training.” A newsletter summarizing the findings says funding for research on synthetic data is uneven, but that international demand is creating opportunities, particularly in data-sensitive industries.
