In 2022, U.S. chipmaker Nvidia released the H100, one of the most powerful processors it makes and one of its most expensive, costing about $40,000 each. The launch appeared ill-timed, coming just as businesses were seeking to cut spending amid rampant inflation.

Then in November, ChatGPT launched.

“We went from a pretty tough year last year to [a turnaround] overnight,” said Jensen Huang, Nvidia’s chief executive. OpenAI’s hit chatbot was an “aha moment,” he said. “It creates immediate demand.”

ChatGPT’s sudden popularity has sparked an arms race among the world’s leading tech companies and startups, which are scrambling to get hold of the H100, a chip Huang describes as “the world’s first computer (chip) designed for generative AI”: artificial intelligence systems that can quickly create human-like text, images, and other content.

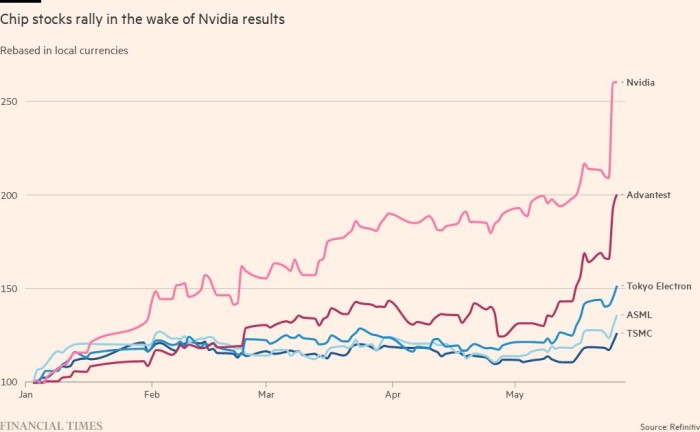

The value of having the right product at the right time became apparent this week. Nvidia said on Wednesday that it expected sales for the three months to July to reach $11 billion, more than 50% above Wall Street’s previous estimate, driven by a revival in data center spending by big tech companies and surging demand for its AI chips.

Investors responded to the forecast by adding $184 billion to Nvidia’s market value in a single day on Thursday, bringing what was already the world’s most valuable chip company close to a $1 trillion valuation.

Nvidia has emerged as an early winner from the meteoric rise of generative AI, a technology that has the potential to reshape industries, bring huge productivity gains and replace millions of jobs.

That technological leap is being accelerated by the H100, which is based on a new Nvidia chip architecture called “Hopper”, named after American programming pioneer Grace Hopper, and which has suddenly become Silicon Valley’s hottest commodity.

“It all started just as we were going into production on Hopper,” Huang said, adding that mass production had begun only a few weeks before ChatGPT’s debut.

Huang’s confidence in continued gains stems in part from Nvidia’s ability to work with chip manufacturer TSMC to scale up H100 production to meet explosive demand from cloud providers such as Microsoft, Amazon and Google, internet groups such as Meta, and enterprise customers.

“It’s one of the scarcest engineering resources on the planet,” said Brannin McBee, chief strategy officer and founder of CoreWeave, an AI-focused cloud infrastructure startup that was among the first companies to receive H100 shipments earlier this year.

Some customers have waited as long as six months for the thousands of H100 chips they want to use to train models on huge data sets. AI startups have expressed concern that the H100 will be in short supply just as demand is taking off.

Elon Musk, who has bought thousands of Nvidia chips for his new AI startup X.ai, said at a Wall Street Journal event this week that GPUs (graphics processing units) are currently “harder to come by than drugs,” joking that this was “not really a high bar in San Francisco.”

“The cost of computing has become astronomical,” Musk added. “The minimum bet has to be $250 million in server hardware (for building generative AI systems).”

The H100 has proven particularly popular with big tech companies like Microsoft and Amazon, which are building entire data centers around AI workloads, and with generative AI startups like OpenAI, Anthropic, Stability AI, and Inflection AI, because its promise of higher performance can speed up product releases or reduce training costs.

“In terms of gaining access, yes, this is what ramping up a new-architecture GPU is like,” said Ian Buck, head of Nvidia’s hyperscale and high-performance computing business, who has the difficult task of increasing H100 supply to meet demand. “It’s happening at scale,” he said, adding that some large customers are looking at tens of thousands of GPUs.

The unusually large chip, an “accelerator” designed for use in data centers, has 80 billion transistors, five times as many as the processors that power the latest iPhones. While it is twice as expensive as its predecessor, the A100, released in 2020, early adopters say the H100 delivers at least three times the performance.

“The H100 solves a scalability problem that has plagued (AI) model creators,” said Emad Mostaque, co-founder and CEO of Stability AI, one of the companies behind the Stable Diffusion image generation service. “This is important because it allows all of us to train larger models faster as this moves from a research problem to an engineering problem.”

While the timing of the H100’s release was ideal, Nvidia’s breakthroughs in AI can be traced back nearly two decades to innovations in software rather than silicon.

Its Cuda software, created in 2006, allows the GPU to be repurposed as an accelerator for workloads other than graphics. Then, around 2012, Buck explained, “AI found us.”

Researchers in Canada realized that GPUs were well suited to building neural networks, a form of artificial intelligence inspired by the way neurons in the human brain interact, which was then becoming a new focus of AI development. “It took us almost 20 years to get to where we are,” Buck said.

Nvidia now has more software engineers than hardware engineers, enabling it to support the many different AI frameworks that have emerged in the years since and to make its chips more efficient at the statistical computations needed to train AI models.

Hopper is the first architecture optimized for transformers, the AI approach that underpins OpenAI’s “generative pre-trained transformer” chatbot. Nvidia’s close collaboration with AI researchers allowed it to spot the emergence of transformers in 2017 and start tuning its software accordingly.

“Nvidia arguably saw the future earlier than anyone else, and they focused on making the GPU programmable,” said Nathan Benaich, general partner at AI startup investor Air Street Capital. “It saw an opportunity and bet big and stayed ahead of the competition.”

Benaich estimates that Nvidia has a two-year head start on its rivals, but adds: “Its position in both hardware and software is far from unassailable.”

Stability AI’s Mostaque agrees. “Next-generation chips from Google, Intel, and others are catching up (and) even Cuda is becoming less of a moat as software is standardized.”

To some in the artificial intelligence industry, Wall Street’s enthusiasm this week may seem overly optimistic. Still, “for now, the market for AI semiconductors looks like it will be a winner-take-all market for Nvidia,” said Jay Goldberg, founder of chip consultancy D2D Advisory.

Additional reporting by Madhumita Murgia